(I believe it is good practice to have a general finetuning script for many different models, but it is just way too complicated for me right now and not a good starting point for understanding how finetuning works.)

# Finetune airbrush code

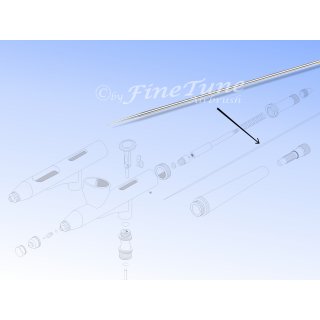

I realize there is this very nice library, "huggingface transformers", that I guess most of you already know. They also have pre-trained models for BART here. I tested the pre-trained bart-large-cnn model and got satisfying results. I also found some huggingface examples for seq2seq here. They have a script for finetuning (finetune.py) as well as one for evaluation (run_eval.py). However, the code is very hard for me to understand, on the one hand because I have not used PyTorch Lightning yet, and on the other hand because the code is written not only for BART but for many different seq2seq models. Unfortunately, I am a beginner when it comes to PyTorch.

FineTune Airbrush: 2-to-4-way airbrush holder; nozzle 'F' (0.2 mm) for the Excalibur series; nozzle 'M' for the FT-630/FT-730/FT-930; fine air-pressure regulation. Three tip sizes and compatibility with water.

To run single-node training, follow the instructions in fine-tuning. Training time is 2h20m for 90 epochs on 32 V100 GPUs. Here the effective batch size is 512 (batch size per GPU) × 4 (nodes) × 8 (GPUs per node) = 16384. The actual lr is computed by the linear scaling rule: lr = blr × effective batch size / 256.
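The effective batch size and the linear scaling rule quoted above reduce to two lines of arithmetic. Below is a minimal sketch; the function names and the base rate `blr = 0.1` in the usage lines are hypothetical, chosen only for illustration and not taken from any particular training script.

```python
def effective_batch_size(batch_per_gpu: int, gpus_per_node: int, nodes: int) -> int:
    """Number of samples that contribute to one optimizer step across all GPUs."""
    return batch_per_gpu * gpus_per_node * nodes


def scaled_lr(blr: float, eff_batch: int, reference_batch: int = 256) -> float:
    """Linear scaling rule: lr = blr * effective batch size / 256."""
    return blr * eff_batch / reference_batch


eff = effective_batch_size(batch_per_gpu=512, gpus_per_node=8, nodes=4)
print(eff)  # 16384
# blr = 0.1 is a hypothetical base learning rate, used only to show the scaling
print(scaled_lr(blr=0.1, eff_batch=eff))
```

Because `blr` is defined per 256 samples, doubling the number of GPUs doubles the effective batch size and, under this rule, doubles the actual learning rate as well.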

I want to try BART for Multi-Document Summarization, and for this I think the MultiNews dataset would be good.

The regulator allows fine-tune adjustments, and a gravity-fed airbrush gun is included. The wrench is perfect for making fine-tune adjustments, and the hanger makes for easy storage of your airbrush. Our airbrush compressor kit will take your painting to a new level.

Without finetuning, CLIP's top-1 accuracy on the few-shot test data is 89.2, which is a formidable baseline. Higher learning rates and a higher weight decay were also tried, in line with the values mentioned in the paper. The best finetuning performance was 91.3, reached after 24 epochs of training with a learning rate of 1e-7 and a weight decay of 0.0001.
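Top-1 accuracy, the metric behind the 89.2 CLIP baseline quoted above, counts a prediction as correct only when the highest-scoring class is the true label. A minimal pure-Python sketch follows; the function name and the toy scores are made up for illustration (for CLIP, the per-class scores would typically be image-text similarity logits).

```python
from typing import Sequence


def top1_accuracy(logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Fraction of samples whose highest-scoring class equals the true label."""
    if len(logits) != len(labels):
        raise ValueError("logits and labels must have the same length")
    if not labels:
        raise ValueError("need at least one sample")
    correct = 0
    for scores, label in zip(logits, labels):
        # argmax over classes; on ties, max() keeps the lowest class index
        predicted = max(range(len(scores)), key=scores.__getitem__)
        correct += int(predicted == label)
    return correct / len(labels)


# Toy example (made-up scores): 3 samples, 3 classes; predictions are [1, 0, 2]
scores = [[0.1, 2.0, 0.3], [1.5, 0.2, 0.1], [0.0, 0.4, 0.9]]
print(top1_accuracy(scores, [1, 0, 1]))  # 0.6666666666666666
```

Multiply the result by 100 to express it as a percentage.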

As the title suggests, I would like to finetune a pre-trained BART model on another dataset.
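To see what finetuning a seq2seq model like BART actually optimizes, it helps to look at how one training pair is prepared. Below is a minimal pure-Python sketch, assuming teacher forcing: the target summary's token ids serve as `labels`, and the decoder receives the same ids shifted one position to the right. In Hugging Face transformers, `BartForConditionalGeneration` performs this shift internally when it receives `labels` without `decoder_input_ids`; the function below only mirrors the idea on plain lists. The token ids in the usage line (pad = 1, decoder start = 2) are assumed to match the pre-trained BART configs and are illustrative.

```python
from typing import List

IGNORE_INDEX = -100  # label value that PyTorch's CrossEntropyLoss skips by default


def shift_tokens_right(labels: List[int], pad_token_id: int, decoder_start_token_id: int) -> List[int]:
    """Build decoder inputs for teacher forcing: prepend the start token, drop the last label."""
    shifted = [decoder_start_token_id] + labels[:-1]
    # ignored label positions (-100) must become a real token id before the embedding lookup
    return [pad_token_id if tok == IGNORE_INDEX else tok for tok in shifted]


# Hypothetical target ids for one summary, padded with -100 to a fixed length
labels = [0, 100, 200, 2, -100, -100]
print(shift_tokens_right(labels, pad_token_id=1, decoder_start_token_id=2))  # [2, 0, 100, 200, 2, 1]
```

At each position the decoder sees the previous target token and is trained with cross-entropy to predict the next one in `labels`; positions set to -100 (typically padding) do not contribute to the loss.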
